Exploiting the Integrated ISR Systems of the Future

Monitoring the mission in real time, the commander scrutinises the all-source intelligence picture for signs that the unmanned strike package has been detected.

A warning suddenly appears on the display, indicating a previously unknown SAM system has switched on its radar.

The element of surprise will be ruined if action is not taken immediately.

The commander’s AI system launches a query into a massive dataset of real-time enemy location data. An alternative, optimised flight path is automatically generated, enabling the unmanned aircraft to swiftly change course.

The AI registers no statistically significant change in the enemy’s posture.

The strike mission continues undetected through the complex air defence system.

When it comes to intelligence, surveillance and reconnaissance (ISR) systems, the exploitation of big data and AI for situational awareness has been described as revolutionary for command and control (C2).[1] Future ISR systems, collectively known as the sensing grid, are intended to use AI to autonomously consolidate all-source information into a single operational picture.[2]

This all-source, fused picture is then used in conjunction with a command grid: a sophisticated AI that rapidly generates enemy courses of action (COA) for commanders and recommends responses.[3]

The strategy behind this is clear: if realised, the sensing grid will enable a C2 system to observe, orient, decide and act (OODA) at machine speed, improving the chances of gaining a decisive advantage over the enemy.

All major powers, including potential adversaries, have development programs aimed at achieving a sensing grid.[4] If the Australian Defence Force (ADF) is to be prepared for conflict against adversaries with a sensing grid, it will need to develop new capabilities to deny the enemy a machine-speed OODA loop.

A counter-AI strategy will enable the ADF to achieve this, significantly lowering the effectiveness of enemy C2 by exploiting the vulnerabilities of AI.

How should the ADF develop these counter-AI capabilities, and what are the vulnerabilities of the sensing grid?

Very little has been written about the potential vulnerabilities of AI use within C2 and ISR systems. Most commentators state that AI will herald an unparalleled ability to increase OODA loop speed and open up innovative approaches to warfare for commanders.

However, sensing grid systems are not without weakness, nor are they impervious to deception or surprise. AI will not, in the foreseeable future, achieve the ‘general intelligence’ of the human mind, and will therefore not understand the context behind an ISR picture it is observing. Instead, machines will speedily calculate a large number of statistical predictions using a system of complex machine learning (ML) algorithms – a process whereby large data sets are mined for patterns.

The system will have been specifically trained to detect anomalies from adversary military systems and to base its predictions solely on probability from the data it has been trained on.

Therefore, by focusing intelligence efforts on understanding and manipulating the adversary’s algorithm design or training data, the ADF can develop capabilities that target specific AI vulnerabilities.

To appreciate the potential weaknesses of the sensing grid, we will need to understand the vulnerabilities of its supporting AI. Put simply, AI is the ‘ability of machines to perform tasks that normally require human intelligence.’[5]

This is accomplished through algorithms which, in the context of AI, attempt to translate human thought processes into computer code. This code sets out the rules the AI must follow, often as a complex system of binary if-then logic.

For decades, AI rules were coded manually: for DEEP BLUE, for example, designers leveraged the expertise of chess masters to code the AI.[6] This method has limitations, as some tasks are too complex to be coded manually. However, significant advances in big data and computational power have made ML algorithms viable for more complex human tasks.[7]

Unlike traditional algorithms, AI built with ML learns its rules from datasets; this approach works well when data is available. The problem for the sensing grid AI, however, is that much of its data will need to be simulated: no real data exists for the wars of the future.
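The difference can be illustrated with a minimal Python sketch contrasting a hand-coded if-then rule with one whose threshold is learned from labelled examples. Everything here is a hypothetical assumption for illustration: the single feature (daily sortie count), the training values, and the "learning" method, which simply places the boundary midway between the two class means rather than using any real ML model. If the labelled examples are simulated, the learned threshold inherits whatever assumptions went into the simulation.

```python
from statistics import mean

def manual_rule(sorties_per_day: int) -> bool:
    """Hand-coded if-then rule: an analyst fixes the threshold (hypothetical value of 40)."""
    return sorties_per_day > 40

def learn_threshold(normal_days: list[int], surge_days: list[int]) -> float:
    """'Learn' a decision boundary midway between the means of the two labelled classes.

    A deliberately simple stand-in for ML training: the rule now comes from the data.
    """
    return (mean(normal_days) + mean(surge_days)) / 2

def learned_rule(sorties_per_day: int, threshold: float) -> bool:
    """Flag activity above the threshold that was learned from data."""
    return sorties_per_day > threshold

# Hypothetical labelled (possibly simulated) training data: daily sortie counts.
normal_days = [18, 22, 20, 25, 19]
surge_days = [45, 52, 48, 50]

threshold = learn_threshold(normal_days, surge_days)
print(f"learned threshold: {threshold:.2f}")
print(manual_rule(38), learned_rule(38, threshold))
```

A day with 38 sorties passes the hand-coded rule but trips the learned one, because the learned boundary sits wherever the (possibly simulated) training data puts it.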

Therefore, the use of AI does not remove the human factor.

Regardless of which AI is used, the rules within an adversary sensing grid will be designed by humans and will retain the vulnerabilities of traditional ISR systems, reflecting the beliefs, culture and biases of those who create them.

By targeting the vulnerabilities of AI design, the ADF can introduce friction into the sensing grid system.

Figure 1 depicts a sensing grid at work.

The AI will not be a single entity, but will be a complex system of ML algorithms designed to predict enemy behaviour through automated analyses of indicators and warnings (I&W).[9] Starting from the left, the diagram depicts a collection of all-source data being funnelled into a format-specific ML algorithm (image, text, signal, etc.) for analysis. Each algorithm will continuously learn and update itself using real-time data streaming to minimise prediction error.

The output from each algorithm is then fused via another ML algorithm to create a single ISR picture for the commander’s use.

This advanced AI system will then attempt to generate predictions about the enemy’s COA using the integrated ISR picture, allowing for a final ML algorithm to recommend a response to the commander for action.[10]

Because each stage consumes the output of the one before it, an inaccuracy in one phase of the OODA loop will carry through to every phase thereafter.
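A toy Python sketch can illustrate this chaining. It assumes a hypothetical three-stage pipeline (the function names, the single radar-emission feature and the threshold values are all invented for illustration) in which each stage sees only the previous stage's output, so a miscalibrated orient stage changes the final recommendation even though the raw observations are identical.

```python
# Hypothetical three-stage pipeline: each stage sees only the previous stage's output.

def observe(raw_reports: list[dict]) -> list[dict]:
    """Keep valid reports (stand-in for the format-specific collection algorithms)."""
    return [r for r in raw_reports if r.get("valid", True)]

def orient(observations: list[dict], radar_threshold: int) -> str:
    """Classify the overall picture as 'anomalous' or 'normal'."""
    radar_emissions = sum(o["radar_emissions"] for o in observations)
    return "anomalous" if radar_emissions > radar_threshold else "normal"

def decide(assessment: str) -> str:
    """Recommend a response using only the orient-phase assessment."""
    return "raise alert" if assessment == "anomalous" else "no action"

reports = [{"radar_emissions": 3}, {"radar_emissions": 4}]

# Same observations, two orient-phase calibrations.
well_calibrated = decide(orient(observe(reports), radar_threshold=5))
# e.g. a threshold set too high because the model was trained on unrepresentative data
miscalibrated = decide(orient(observe(reports), radar_threshold=50))
print(well_calibrated, "|", miscalibrated)
```

The decide stage never sees the raw reports, so it has no way to detect that the orient stage has misread them.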

Which phase, then, is the most vulnerable?

The critical vulnerability in the sensing grid is the orient phase of the OODA loop.

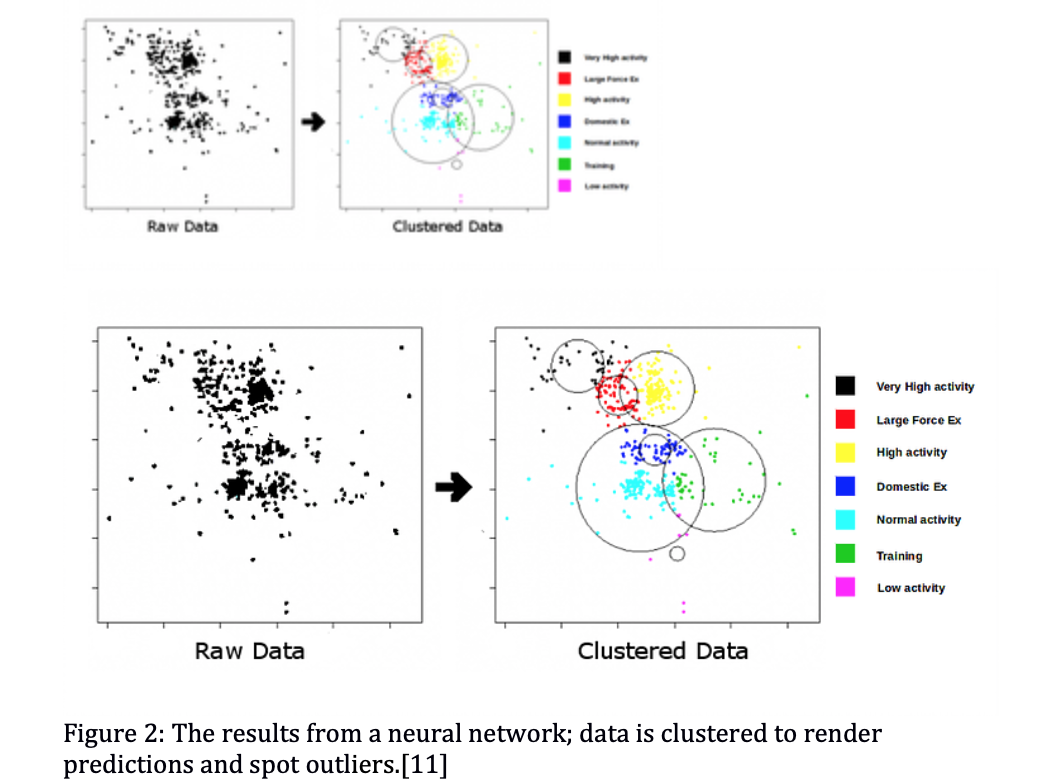

Figure 2 depicts the result of an all-source fused dataset used to train an ML algorithm for the orient phase. This particular example is a simplified output of a semi-supervised neural network algorithm designed to classify behaviour and detect any changes in activity. The algorithm clusters real-time data according to predefined attributes known as ‘features’, such as the number of enemy flights, movements of naval vessels and detected radar signals. It is the patterns established between the features that provide the algorithm with the information needed to accurately predict adversary actions.[12]

Each datum is assigned a probability that marks it as belonging to a specific cluster, thus flagging it for a specific action if required. For a commander observing an operating area, for example, the algorithm will autonomously display flights that constitute a ‘normal behaviour’ cluster, while identifying a long-range patrol elsewhere and flagging it as an outlier requiring attention.
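As a rough illustration of soft cluster membership with outlier flagging, the Python sketch below substitutes a much simpler technique for the semi-supervised neural network described above: membership probabilities are computed from Gaussian distances to hand-set cluster centres (equal priors, one shared spread), and any datum far from every centre is flagged as an outlier. The cluster names, centres, spread and outlier distance are hypothetical values chosen for illustration only.

```python
import math

# Hypothetical cluster centres in feature space:
# (enemy flights per day, naval vessel movements, radar emissions detected).
CENTRES = {
    "normal behaviour": (20.0, 5.0, 10.0),
    "training exercise": (35.0, 12.0, 25.0),
}
SIGMA = 5.0              # assumed spread of every cluster, same for all features
OUTLIER_DISTANCE = 15.0  # assumed: farther than this from every centre => outlier

def membership(datum: tuple[float, ...]) -> dict[str, float]:
    """Probability of the datum belonging to each cluster (equal priors, shared spread)."""
    log_scores = {
        name: -math.dist(datum, centre) ** 2 / (2 * SIGMA ** 2)
        for name, centre in CENTRES.items()
    }
    top = max(log_scores.values())  # subtract the max so exp() cannot underflow to all zeros
    weights = {name: math.exp(s - top) for name, s in log_scores.items()}
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}

def classify(datum: tuple[float, ...]) -> str:
    """Return the most probable cluster, or flag the datum if it fits none of them."""
    if min(math.dist(datum, c) for c in CENTRES.values()) > OUTLIER_DISTANCE:
        return "outlier: flag for attention"
    probs = membership(datum)
    return max(probs, key=probs.get)

routine_flights = (21.0, 5.0, 11.0)
long_range_patrol = (22.0, 5.0, 60.0)
print(classify(routine_flights))
print(classify(long_range_patrol))
```

Separating the probability (which cluster fits best) from the outlier test (whether any cluster fits at all) mirrors the distinction in the text between displaying routine flights and flagging the long-range patrol.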

Preventing the orient-phase ML algorithm from accurately clustering data may enable deception or surprise against a sensing grid system, sowing confusion and distrust in the AI’s recommendations to the commander.

The question then becomes: how does the ADF ensure the enemy AI is working from inaccurate data? To answer this, we need to explore adversarial ML techniques such as ‘data poisoning’, ‘evasion’ and ‘model corruption’. All three can be used by the ADF as counter-AI tactics to exploit the enemy’s sensing grid.

Counter-AI Tactic: Data Poisoning

A critical weakness of the sensing grid AI is that it is prone to inaccurate predictions during the initial stages of a conflict. This is because the AI can only be as accurate as the parameters and data it was assigned and trained with before going live. During the initial research and development stage of a sensing grid, AI designers select the ML models, specify which features are to be used for predictions, and then train the models accordingly.[13] Since real data for all possible enemy courses of action (COA) does not exist, the datasets used to train the sensing grid are likely to be synthetic, i.e. ‘simulated’. These simulations will be based primarily on intelligence assessments and beliefs about the enemy.

Therefore, if the training dataset or simulation fails to accurately reflect an adversary’s COA during the initial stages of conflict, the AI’s predictions will likely be inaccurate until it updates and adjusts itself to new adversary behaviour.[14]

This lag in accuracy may produce suboptimal responses from the AI during the critical initial stages of a conflict.

Information warfare’s objective of targeting the beliefs and assumptions of the adversary will remain, but in this context the end goal changes to the denial of accurate data for ML training.

Therefore, to slow the enemy’s OODA loop at the start of a conflict, the ADF should focus on encouraging a potential adversary to train its sensing grid on inaccurate data. This will require traditional counterintelligence and strategic deception efforts to shape an adversary’s beliefs, essentially encouraging it to poison its own data.

Another option would be forced data poisoning through cyber-attacks, whereby false data is embedded into an AI’s training dataset to weaken its predictive accuracy. This would generate inaccurate predictions even if the enemy’s beliefs and intelligence assessments are accurate.
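The effect of poisoning can be sketched in Python with a deliberately simple anomaly detector standing in for the sensing grid's ML model: it learns an alert threshold as the mean plus three standard deviations of the activity it was told is normal. The training values, the multiplier and the "surge" figure are hypothetical. Slipping a few high-activity days into the "normal" training set raises the learned threshold enough that genuine attack preparation no longer triggers an alert.

```python
from statistics import mean, stdev

def train_detector(normal_training_days: list[float], k: float = 3.0) -> float:
    """Learn an alert threshold as mean + k sample standard deviations of 'normal' activity."""
    return mean(normal_training_days) + k * stdev(normal_training_days)

def is_alert(observed: float, threshold: float) -> bool:
    """Flag activity above the learned threshold."""
    return observed > threshold

# Hypothetical simulated training data: daily flights the adversary believes are routine.
clean = [20, 22, 19, 21, 23, 20, 18, 22]
# Poisoned: deception or a cyber intrusion has inserted high-activity days labelled 'normal'.
poisoned = clean + [38, 41, 40, 39]

surge = 35  # hypothetical activity on the first day of a real operation

clean_threshold = train_detector(clean)
poisoned_threshold = train_detector(poisoned)
print(f"clean threshold {clean_threshold:.1f}: alert={is_alert(surge, clean_threshold)}")
print(f"poisoned threshold {poisoned_threshold:.1f}: alert={is_alert(surge, poisoned_threshold)}")
```

The detector code is identical in both cases; only the training data differs, which is why poisoning works even when the model itself is sound.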

Counter-AI Tactic: Evasion and Model Corruption

Data poisoning may grant an initial advantage, but it will not last long; the sensing grid system will learn from its mistakes and adjust to ADF actions. During conflict, however, knowledge of the orient-phase ML algorithm could leave the system open to outside manipulation, making it more exploitable than a human analyst.

By identifying the parameters responsible for triggering an AI’s change detector, one could develop counter-AI tactics for conducting operations beneath the threshold at which the output is classified as threatening. For example, we may discover that the number of RAAF air mobility flights per day has been designated as a feature within an enemy’s ML algorithm, with sudden upward trends in activity accorded a high probability of signalling attack preparation.

Such intelligence could be used to strategically conduct air mobility flights below the known classification threshold to effectively circumvent the opponent’s I&W system.
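A minimal Python sketch of such scheduling, assuming the adversary's threshold is a single known daily flight count (a hypothetical simplification; a real model would weigh many features and trends together). The function name, the safety margin and all numbers are illustrative assumptions. The idea is to spread the required flights over enough days that the daily total never reaches the threshold.

```python
import math

def plan_flights(total_flights: int, routine_per_day: int, known_threshold: int,
                 margin: int = 2) -> list[int]:
    """Spread extra flights so each day's total stays below a known classification threshold.

    known_threshold: hypothetical daily flight count above which the adversary's model
                     is believed to flag attack preparation.
    margin: safety buffer below the threshold, allowing for estimation error.
    Returns the number of extra flights to fly on each day.
    """
    if total_flights <= 0:
        return []
    capacity = known_threshold - margin - routine_per_day  # extra flights per day that stay 'normal'
    if capacity <= 0:
        raise ValueError("no room below the threshold: routine activity is already too close")
    days = math.ceil(total_flights / capacity)
    schedule = [capacity] * (days - 1)
    schedule.append(total_flights - capacity * (days - 1))  # remainder on the final day
    return schedule

# Flying all 60 extra flights in one day would mean 80 flights, far above a threshold of 35.
schedule = plan_flights(total_flights=60, routine_per_day=20, known_threshold=35)
print(schedule)  # → [13, 13, 13, 13, 8]
```

The trade-off is time: staying under the threshold stretches one day's activity across five, which is why such tactics suit long-term preparation rather than short-notice operations.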

These tactics could be tested against a simulated enemy sensing grid if we are able to acquire sufficient knowledge of how the enemy’s was built.

Alternatively, if the AI is set up to continuously learn from real-time data streaming, we could create false patterns of life, or lower its sensitivity to certain features, thereby corrupting an adversary’s algorithm into classifying abnormal behaviour as normal. This becomes especially important as a form of long-term preparation for critical operations, and examples of the technique are already occurring in cyberspace today.

AI is used in cyber-defence to detect and prevent attacks against security systems by differentiating between normal and anomalous patterns of behaviour within a network. The challenge arises when attackers know how the defence works, allowing them to design attacks that manipulate the algorithm’s threshold of detection.

These include ‘slow attacks’, in which an intruder gradually inserts its presence into a targeted network over time, corrupting the security ML algorithm into perceiving that presence as normal.[15] Such deceptive techniques can be implemented against a sensing grid system that has grown overly reliant upon its AI.
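The logic of a slow attack can be sketched in Python against a simple continuously learning change detector. Here an exponentially weighted moving average (EWMA) of daily activity is an assumed stand-in for the adversary's ML algorithm, and the smoothing factor, tolerance and activity figures are hypothetical. An abrupt jump from 20 to 40 flights is flagged, but a ramp of one extra flight per day is absorbed into the baseline without a single alert, after which 40 flights a day looks normal.

```python
def run_detector(observations: list[float], baseline: float,
                 alpha: float = 0.3, tolerance: float = 5.0) -> tuple[list[bool], float]:
    """Continuously learning change detector (hypothetical EWMA stand-in).

    An observation more than `tolerance` above the current baseline raises an alert.
    Observations that do not alert are treated as normal and folded into the baseline.
    Returns the per-observation alerts and the final learned baseline.
    """
    alerts = []
    for x in observations:
        alert = x - baseline > tolerance
        alerts.append(alert)
        if not alert:
            # Only 'normal' data is learned, so an abrupt anomaly never shifts the baseline,
            # but a ramp gentle enough to stay under the tolerance is learned as normal.
            baseline = alpha * x + (1 - alpha) * baseline
    return alerts, baseline

abrupt = [20.0] * 5 + [40.0] * 5            # sudden surge in daily flights
slow = [20.0 + day for day in range(21)]    # one extra flight per day, 20 up to 40

abrupt_alerts, _ = run_detector(abrupt, baseline=20.0)
slow_alerts, learned_baseline = run_detector(slow, baseline=20.0)
print(any(abrupt_alerts), any(slow_alerts), round(learned_baseline, 1))

# After the slow ramp, 40 flights a day no longer stands out.
later_alerts, _ = run_detector([40.0], baseline=learned_baseline)
print(later_alerts)
```

With a smoothing factor of 0.3 and a one-flight daily ramp, the gap between each new observation and the baseline settles at about 3.3 flights (the daily increase divided by the smoothing factor), below the tolerance of 5, which is why the ramp never alerts.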

The sensing grid’s reliance on AI creates a vulnerability that can be exploited through a counter-AI strategy. Encouraging and enabling the enemy’s loss of trust in its own ISR system serves as the central goal for a counter-AI strategy.

A single successful operation in deception may be all that is required to permanently impair an adversary’s trust in its AI predictions.

With system outputs and predictive accuracy plagued by second-guessing, the adversary’s OODA loop slows to the point where the system is under-utilised, thereby providing the ADF with the advantage.

Jacob Simpson is a Flying Officer in the Royal Australian Air Force. He holds a Master’s in Strategic Studies from the Australian National University and is currently undertaking a Master’s in Decision Analytics at the University of New South Wales.

References

- En, T. (2016). Swimming in Sensors, Drowning in Data: Big Data Analytics for Military Intelligence. Journal of the Singapore Armed Forces, 42(1).

- USAF (2018). Air Force Charts Course for Next Generation ISR Dominance. [online] U.S. Air Force. Available at: https://www.af.mil/News/Article-Display/Article/1592343/air-force-charts-course-for-next-generation-isr-dominance/.

- Layton, P. (2017). Fifth Generation Air Warfare. [online] Air Power Development Centre. Available at: http://airpower.airforce.gov.au/APDC/media/PDF-Files/Working%20Papers/WP43-Fifth-Generation-Air-Warfare.pdf.

- Pomerleau, M. (2018). In threat hearing, DoD leaders say data makes an attractive target. [online] C4ISRNET. Available at: https://www.c4isrnet.com/intel-geoint/2018/02/13/in-threat-hearing-dod-leaders-say-data-makes-an-attractive-target/ [Accessed 10 May 2020].

- Allen, G. (2020). Understanding AI Technology. [online] Joint Artificial Intelligence Center. Available at: https://www.ai.mil/docs.Understanding%20AI%20Technology.pdf.

- RSIP Vision (2015). Exploring Deep Learning & CNNs. [online] RSIP Vision. Available at: https://www.rsipvision.com/exploring-deep-learning/.

- Whaley, R. (n.d.). The big data battlefield. [online] Military Embedded Systems. Available at: http://mil-embedded.com/articles/the-big-data-battlefield/

- DARPA (2014). Insight. [online] www.darpa.mil. Available at: https://www.darpa.mil/program/insight.

- Pomerleau, M. (2017). How the third offset ensures conventional deterrence. [online] C4ISRNET. Available at: https://www.c4isrnet.com/it-networks/2016/10/31/how-the-third-offset-ensures-conventional-deterrence/ [Accessed 10 May 2020].

- Kainkara, S. (2019). Artificial Intelligence and the Future of Air Power. [online] Air Power Development Centre. Available at: http://airpower.airforce.gov.au/APDC/media/PDF-Files/working&Papers/WP45-Artifical-Intelligence-and-the-Future-of-Air-Power.pdf.

- Ahuja, P. (2018). K-Means Clustering. [online] Medium. Available at: https://medium.com/@pratyush.ahuja10/k-means-clustering-442ed00ca7b8 [Accessed 9 Jun. 2020].

- Richbourg, R. (2018). ‘It’s Either a Panda or a Gibbon’: AI Winters and the Limits of Deep Learning. [online] War on the Rocks. Available at: https://warontherocks.com/2018/05/its-either-a-panda-or-a-gibbon-ai-winters-and-the-limits-of-deep-learning/ [Accessed 10 May 2020].

- Yufeng G (2017). The 7 Steps of Machine Learning. [online] Medium. Available at: https://towardsdatascience.com/the-7-steps-of-machine-learning-2877d7e5548e.

- Mahdavi, A. (2019). Machine Learning and Simulation: Example and Downloads. [online] Available at: https://www.anylogic.com/blog/machine-learning-and-simulation-example-and-downloads/.

- Beaver, J. M., Borges-Hink, R. C. and Buckner, M. A. (2013). An Evaluation of Machine Learning Methods to Detect Malicious SCADA Communications. In: Proceedings of the 2013 12th International Conference on Machine Learning and Applications (ICMLA), vol. 2, pp. 54-59. doi: 10.1109/ICMLA.2013.105

This article was published by Central Blue on July 11, 2020.