Perspectives on the Introduction of Autonomous Systems into Combat

The Williams Foundation seminar on Next Generation Autonomous Systems, held on April 8, 2021, began with two presentations that focused on the nature of the challenges of introducing autonomous systems into the combat force.

The first presentation was by Group Captain Jo Brick of the Australian Defence College.

Brick frequently opens Williams Foundation seminars and provides very helpful orientations to the issues each seminar will address. This one was no different, given the challenging topic of understanding the nature of, and the way ahead for, next generation autonomous systems.

She highlighted a number of fictional examples of how the relationship between man and machine has been envisaged for the future. But she focused on a key aspect of the challenge: how will data flow in the combat force, and how will that data be used to make lethal decisions?

She posed a number of key questions about the way ahead for the introduction and proliferation of autonomous systems in the combat force.

Do we trust the systems that we have created?

Do we expect them to be perfect, or will we accept that they are flawed, just like us?

Do we understand autonomous systems well enough to inform the creation of an effective system of accountability?

How would autonomous and intelligent systems make decisions, free from human intervention?

Would they reflect the best of humanity or something less inspiring?

Does the conduct of war by autonomous and intelligent systems dilute the sanctity of war as a societal function?

Who or what is permitted to fight wars and to take life on behalf of the state?

What does the use of AI and autonomous systems in warfare mean for the profession of arms?

One could add to her list another question, one that was discussed directly or indirectly throughout the seminar: What will be the impact of the introduction and proliferation of autonomous systems on the art of warfare?

The second presentation, which followed that of Group Captain Brick, was by Professor Rob McLaughlin from the Australian National Centre for Ocean Resources and Security.

In his presentation, McLaughlin dealt with the wider ethical and legal issues that autonomous systems raise, issues that need to be resolved in shaping a way ahead for their broader use in the militaries of liberal democratic states.

Another key question is how authoritarian states, which are not restricted by the ethical concerns of liberal democratic societies, use and will use these systems. There is always the reactive enemy, who will shape tactics and strategies that take into account how liberal democratic militaries limit themselves with regard to systems such as autonomous ones.

McLaughlin started with the most basic question: What is an autonomous weapon system (AWS)? He turned to a definition provided by the International Committee of the Red Cross, which defines an AWS as follows:

“Any weapon system with autonomy in its critical functions. That is, a weapon system that can select and attack targets without human intervention. After initial activation by a human operator, the weapons system – through its sensors, software (programming/algorithms) and connected weapon(s) – takes on the targeting function that would normally be controlled by humans.”

He then added a further consideration from the same source: “The key distinction, in our view, from non-autonomous weapons is that the machine self-initiates an attack.”

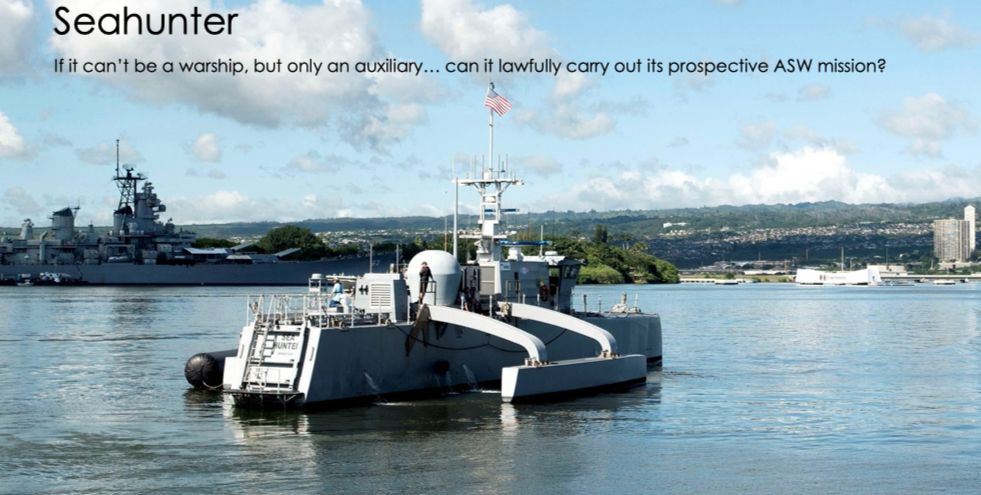

This in turn raises a key question: when is an AWS, in legal terms, an independent unit (like a warplane or warship) rather than simply a sensor or weapon?

That leads to a further consideration: Can a maritime autonomous weapon system be a warship in terms of international law? According to Article 29 of the United Nations Convention on the Law of the Sea: “For the purposes of this Convention, warship means a ship belonging to the armed forces of a state bearing the external marks distinguishing such ships of its nationality, under the command of an officer duly commissioned by the government of the state and whose name appears in the appropriate service list or its equivalent and manned by a crew which is under regular armed forces discipline.”

Such a definition quickly raises issues for autonomous maritime platforms. Are they marked with the nation’s identification? Or might they be introduced without such markings, as the Germans did with merchant “warships” in World War II? What does “under the command” mean in practice for autonomous maritime platforms? Is there a crewed mother ship that controls them? And if they are uncrewed, what about the manning requirement?

He argued that we have not yet reached a threshold at which these questions become practical obstacles to development. But we “will meet a frontier at some point – probably with AI and advanced machine learning.”

In short, with autonomous systems on the horizon, many questions remain about how they will affect the force, and concepts of operations built around these capabilities will face ethical and legal challenges as well.

The briefing slides from both presentations can be viewed below:

01._NGAS Brick
02._NGAS McLaughlin