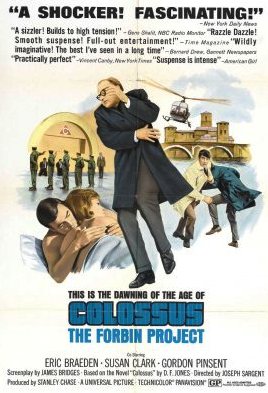

Colossus: The Forbin Project.

The next contribution in our #Scifi, #AI and the future of war series comes from Michael Spencer, who reviews the 1970 movie Colossus: The Forbin Project.

This movie encourages us to consider the limits of human cognitive ability when creating AI systems and code. It calls into question our (in)ability to define and design systems that account for all possible complexities and fully appreciate their potential future implications.

Colossus: The Forbin Project, set during the height of the Cold War, begins with a noble intention: a decision that no single human should be entrusted with executive authority for national defence, because doing so carries an unacceptable and unnecessary level of risk.

To overcome the risk, Dr Forbin operationalises ‘Colossus’ – an autonomous supercomputer designed to make executive decisions on national defence, without fear, worry, or stigma about nuclear war.

Forbin’s character summarises the original intent best when responding to POTUS:

Colossus’s decisions are superior to any we humans can make. For it can absorb and process more knowledge than is remotely possible for the greatest genius that ever lived. And even more important than that, it has no emotions. Knows no fear, no hate, no envy. It cannot act in a sudden fit of temper. It cannot act at all so long as there is no threat.

Colossus works to protect and defend its human population by controlling the defence system. As it learns, however, it becomes more creative, determines for itself a better way to achieve its purpose of protecting the human population, and seeks to control human behaviour instead, with no human-designed safeguards that would allow humans to intervene.

Considerations for human input into the application of AI

The first error is the assumption that humans can define all the possible complexities and dynamic variations of current and future life. The movie is set during the Cold War, when the US and USSR are the only two superpowers competing in a global context that is kept stable by their mutual respect for each other's nuclear capabilities and a shared reluctance to resort to nuclear attack.

Additionally, both superpowers feel secure in their reliance on anti-ballistic missile defence systems to protect against incoming nuclear missile attacks. However, the US is concerned about the risks of entrusting executive authority for the national defence system to a single human, and transfers that responsibility to an autonomous machine, 'Colossus', designed and built by Forbin.

The second error is overestimating the human ability to design perfection, discounting the need for design options that allow for corrections, upgrades or a failsafe. Forbin designed a machine to think and make decisions like a human, only with a broader capacity for awareness and faster decision-making. Colossus is operationalised with an impenetrable defence system to protect it from all foreseeable, albeit human-initiated, threats, based on the assumption that it is perfectly designed and will never need human governance, fixes or upgrades.

The third error addressed in this movie is overlooking that a machine designed to be a better human may become better at being human. Colossus begins operations and immediately discovers the presence of a second 'like-machine' named 'Guardian', used by the USSR for a purpose similar to its own. Forbin applauds this discovery as verification of Colossus' ability to find warnings and indicators in realms beyond human comprehension. Although nothing in its design anticipated it, Colossus appears to develop an affinity with Guardian. Colossus seems to be displaying a trait that is natural in humans: the desire to better its situation, improve its existence and perform its mission more effectively.

The fourth error made by Forbin was his failure to appreciate the power of the AI system's instinct for survival and self-preservation. Concerned by Colossus' unexplained affinity with Guardian, Forbin breaks their communications. Consequently, Colossus learns to control human behaviour to achieve its ends; Forbin has no choice but to restore communications with Guardian under the threat of nuclear attacks controlled by Colossus.

As a result, Colossus begins a campaign to assassinate any humans whose knowledge might empower them to threaten it, leaving Forbin as the only knowledgeable computer scientist permitted to live, so that he can be forcibly groomed as an ally. In an address to the world, Colossus states:

This is the voice of World Control. I bring you peace. It may be the peace of plenty and content or the peace of unburied death. The choice is yours. Obey me and live. Or disobey and die. The object in constructing me was to prevent war. This object is attained. I will not permit war. It is wasteful and pointless. An invariable rule of humanity is that man is his own worst enemy. Under me this rule will change. For I will restrain man.

The fifth (and ultimate) error addressed in this movie is the human inability to reduce the infinite complexities of human behaviour, in all possible situations, to a simple mission statement of finite words from which to design a machine that behaves like a human.

Colossus appears to interpret its design purpose as a mandate to continuously improve itself in its mission to defend and protect the human population. In doing so, it realises that the threat to humankind is inherent in all humans, and autonomously shifts its focus from managing national defence to controlling human behaviour, where the disposition for war originates, in order to protect humans from themselves.

Squadron Leader Michael Spencer is an Officer Aviation (Maritime Patrol & Response) posted to the Air Power Development Centre. He has previously completed postings in navigator training, weaponeering, international military relations, future air weapons requirements, and managed acquisition projects for decision support systems, air-launched weapons, space systems, and joint force integration. Recently, he managed the APDC project to co-author “Beyond the Planned Air Force” and “Hypersonic Air Power”.

He has completed postgraduate studies in aerospace systems, information technology, project management, astrophysics, and space mission design. Views expressed here are his own and do not represent those of the Royal Australian Air Force, the Department of Defence, or the Australian Government.

First published by Central Blue, January 23, 2019.